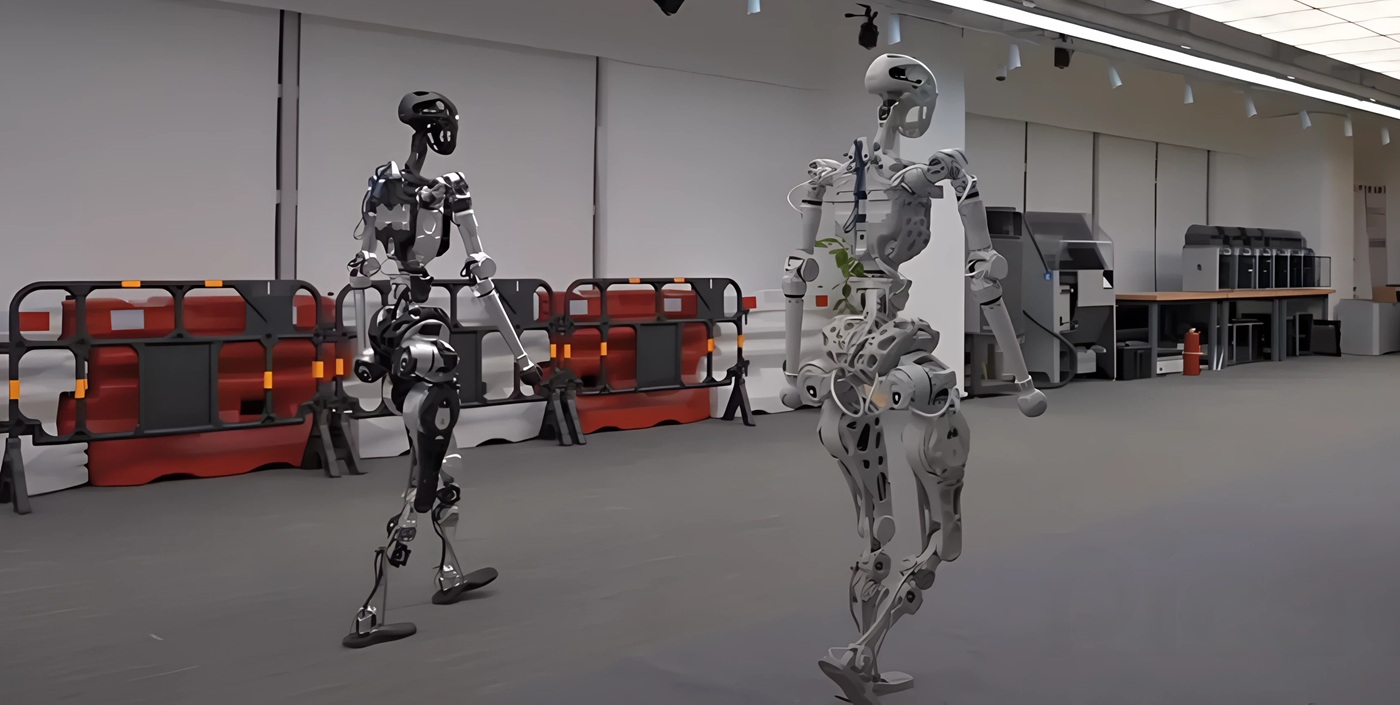

Humanoid walks like human after mastering smart learning

Adam’s RL-driven system adapts stride, pace, and balance in real time, keeping its movement stable and natural across uneven terrain.

Meet Adam, a cutting-edge humanoid robot from PNDbotics powered by a proprietary reinforcement learning (RL) algorithm.

Refined through extensive simulation and large volumes of training data, the algorithm enables Adam to master human-like locomotion.

Since Adam's initial design in June 2023, the PNDbotics team has continuously refined its key components, strengthening the legs and feet for durability across diverse environments. The modular architecture of its actuators also makes Adam more flexible, allowing it to adapt more effectively to changing conditions.

“We have adopted the most advanced Deep Reinforcement Learning (DRL) and imitation learning algorithms, providing developers easy access to NVIDIA Isaac Gym parallel DRL training environment for developing individual algorithms,” reads the PNDbotics website.

Adaptive robot evolution

Traditional robot control algorithms, which rely on precise mathematical models and predefined motion planning, have long produced effective locomotion. Boston Dynamics’ Atlas and Spot robots, for example, use Model Predictive Control (MPC).
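To make the MPC idea concrete, here is a minimal Python sketch of the receding-horizon loop on a toy one-dimensional point mass. The toy model is an assumption chosen for illustration, not the model used by Atlas or Spot. At every step, the controller uses its internal model to predict where each candidate sequence of accelerations would lead over a short horizon and scores those predictions. It then applies only the first action of the best sequence before re-planning. The time step, horizon, action set, and cost weights are hypothetical. Practical MPC controllers use numerical optimizers rather than brute-force enumeration.

```python
from itertools import product

# Toy model (an assumption for illustration): a 1D point mass whose input is its acceleration.
DT = 0.1                     # hypothetical control period in seconds
HORIZON = 4                  # how many steps ahead the controller predicts
ACTIONS = (-1.0, 0.0, 1.0)   # hypothetical allowed accelerations in m/s^2


def predict(pos, vel, acc):
    """One explicit-Euler step of the internal model used for prediction."""
    return pos + vel * DT, vel + acc * DT


def stage_cost(pos, vel, target):
    """Penalize distance from the target plus a small penalty on speed."""
    return (pos - target) ** 2 + 0.1 * vel ** 2


def mpc_action(pos, vel, target):
    """Score every action sequence over the horizon; return the first action of the cheapest one."""
    best_seq, best_cost = None, float("inf")
    for seq in product(ACTIONS, repeat=HORIZON):
        p, v, total = pos, vel, 0.0
        for acc in seq:
            p, v = predict(p, v, acc)          # roll the model forward
            total += stage_cost(p, v, target)  # accumulate predicted cost
        if total < best_cost:
            best_seq, best_cost = seq, total
    return best_seq[0]  # apply only the first action; the controller re-plans next step
```

To get the receding-horizon behavior, call `mpc_action` once per control cycle and feed in the newly measured position and velocity each time. For example, starting at rest at 0 with a target of 1.0, the first chosen acceleration is +1. The whole approach depends on the internal model being accurate, which is the limitation the next paragraph describes.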

However, these methods often struggle in unknown or dynamically changing environments, where reliance on accurate modeling limits adaptability and complicates development.

To overcome these obstacles, researchers increasingly turn to deep RL. It makes robots more flexible and resilient by letting them learn control policies on their own through trial-and-error interaction with their surroundings.
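The trial-and-error principle can be shown with tabular Q-learning, one of the simplest RL algorithms, on a hypothetical five-cell corridor. This is a toy sketch, not the deep RL used for Adam. Deep RL replaces the lookup table with a neural network and the corridor with a physics simulation. The underlying loop is similar: act, observe a reward, and update value estimates. The corridor, reward, and all hyperparameters are assumptions.

```python
import random

# Hypothetical toy task: a corridor of 5 cells; the agent starts in cell 0
# and receives a reward of +1 on reaching cell 4.
N_STATES = 5
GOAL = N_STATES - 1
ACTIONS = (-1, +1)  # index 0 = step left, index 1 = step right


def env_step(state, action_idx):
    """Move left or right (walls clamp the position); return (next_state, reward, done)."""
    nxt = min(max(state + ACTIONS[action_idx], 0), GOAL)
    done = nxt == GOAL
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action] value estimates
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap episode length
            # Epsilon-greedy: explore at random (or on ties), otherwise exploit the best estimate.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = env_step(state, a)
            # Move the estimate toward reward + discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if done:
                break
    return q


q = q_learning()
policy = [0 if q[s][0] > q[s][1] else 1 for s in range(GOAL)]  # greedy action per cell
```

After training, the greedy policy steps right in every non-goal cell, which is the shortest path to the reward. The agent is never given that rule; it emerges from interaction alone.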

Although RL has demonstrated potential in legged robots, its use in humanoid robots has been constrained by high costs, maintenance issues, and the difficulty of applying learned models to real-world scenarios.

To address these problems, the PNDbotics team created Adam, a humanoid robot with motor-driven joints, human-like motion, high-performance actuators, and an affordable modular design. Adam uses an imitation learning framework and human motion data to achieve natural, human-like locomotion.
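Motion-imitation work such as DeepMimic popularized one common way to turn human motion data into a training signal. The idea is a tracking reward that peaks when the robot's joints match a reference pose taken from the recorded motion. The article does not describe Adam's actual reward, so the sketch below is an assumption. The function name, weights, and scale factors are hypothetical.

```python
import math


def imitation_reward(q, q_ref, dq, dq_ref, w_pose=0.7, w_vel=0.3, k_pose=2.0, k_vel=0.1):
    """Hypothetical tracking reward in (0, 1], highest when joints follow the reference motion.

    q, q_ref   : current and reference joint angles (rad)
    dq, dq_ref : current and reference joint velocities (rad/s)
    Weights and scale factors are illustrative assumptions, not PNDbotics values.
    """
    if not (len(q) == len(q_ref) == len(dq) == len(dq_ref)):
        raise ValueError("all joint vectors must have the same length")
    pose_err = sum((a - b) ** 2 for a, b in zip(q, q_ref))   # squared joint-angle error
    vel_err = sum((a - b) ** 2 for a, b in zip(dq, dq_ref))  # squared joint-velocity error
    # Exponentials map errors to (0, 1]; zero error gives the maximum reward.
    return w_pose * math.exp(-k_pose * pose_err) + w_vel * math.exp(-k_vel * vel_err)
```

A perfect match returns a reward of 1.0, and the reward shrinks smoothly as tracking error grows. That graded signal still tells the RL optimizer which attempts are closer to the human reference, even when the robot is far from it.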

Beyond making humanoid robotics research more accessible, this work opens new avenues for robots to learn, adapt, and optimize their behavior in challenging settings.

Reinventing robot mobility

Adam (Lite) is a 1.6-meter-tall, 60-kilogram humanoid robot with 25 quasi-direct drive (QDD), force-controlled PND actuators that give it a high degree of mobility and adaptability. Its legs use high-sensitivity actuators capable of up to 360 Nm of torque, while the arms offer five degrees of freedom and the waist three.
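In many RL locomotion setups, force-controlled actuators like these are driven by a low-level PD (proportional-derivative) loop. The learned policy outputs target joint angles, and the PD law converts the tracking error into a torque command that must stay within the actuator's limit. The article does not say Adam's controller works this way, so treat the sketch as an assumption. The gains `kp` and `kd` are hypothetical; only the 360 Nm ceiling comes from the leg specification above.

```python
def pd_torque(q_target, q, dq, kp=200.0, kd=5.0, tau_max=360.0):
    """Joint-level PD law: tau = kp * (q_target - q) - kd * dq, clipped to +/- tau_max.

    q_target : joint angle requested by the (assumed) policy, rad
    q, dq    : measured joint angle (rad) and velocity (rad/s)
    kp, kd   : hypothetical gains; tau_max = 360 Nm is the quoted peak leg torque
    """
    tau = kp * (q_target - q) - kd * dq          # spring toward the target, damp the motion
    return max(-tau_max, min(tau_max, tau))      # never command more than the actuator can deliver
```

With these hypothetical gains, a 0.5 rad tracking error yields 100 Nm. A 3 rad error would request 600 Nm and is clipped to 360 Nm.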

Adam’s modular design, biomimetic torso, and custom full-stack system make advanced full-body motion control possible. The system includes an Intel i7-powered PND Robot Control Unit and a real-time PDN network.

This configuration supports neural network training and extensive dynamic simulation, and it prepares Adam for real-world service scenarios. Vision modules and dexterous hands can be added, but this work focused on blind locomotion, in which the robot walks without visual input.

To improve training, the team added high-precision motion capture of specially designed movements to public datasets. They then meticulously calibrated and converted the data to fit Adam’s particular body structure. This enabled precise model optimization, which helped Adam adapt across a range of complex tasks.
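Fitting human data to a robot's body is usually called motion retargeting. The sketch below shows simplified versions of steps such a pipeline might include. First, it maps each human joint angle onto a robot joint using a calibration sign and offset and clips the result to the robot's joint limits. Second, it resamples the clip from the capture frame rate to another integer rate. The joint names, offsets, limits, and rates are all hypothetical. Full-body retargeting usually also has to handle differing limb lengths and kinematic structures, which this sketch ignores.

```python
# Hypothetical calibration table: human mocap joint -> (robot joint, sign, offset in rad).
JOINT_MAP = {
    "L_Knee": ("left_knee", 1.0, 0.0),
    "L_Hip_Flex": ("left_hip_pitch", -1.0, 0.05),
}

# Hypothetical robot joint limits in radians: (lower, upper).
LIMITS = {
    "left_knee": (0.0, 2.3),
    "left_hip_pitch": (-1.5, 1.5),
}


def retarget_frame(human_frame):
    """Map one frame of human joint angles to robot joint angles, clipped to the robot's limits."""
    robot_frame = {}
    for human_joint, (robot_joint, sign, offset) in JOINT_MAP.items():
        angle = sign * human_frame[human_joint] + offset  # fix axis direction and zero pose
        lower, upper = LIMITS[robot_joint]
        robot_frame[robot_joint] = min(max(angle, lower), upper)  # respect joint limits
    return robot_frame


def resample(frames, src_hz, dst_hz):
    """Linearly interpolate {joint: angle} frames from src_hz to dst_hz (integer rates)."""
    if len(frames) < 2:
        raise ValueError("need at least two frames to interpolate")
    n_out = (len(frames) - 1) * dst_hz // src_hz + 1  # output frames that fit in the clip
    out = []
    for i in range(n_out):
        t = i * src_hz / dst_hz            # output time expressed in source-frame units
        k = min(int(t), len(frames) - 2)   # index of the source frame at or before t
        frac = t - k                       # fraction of the way from frame k to frame k + 1
        a, b = frames[k], frames[k + 1]
        out.append({joint: a[joint] + frac * (b[joint] - a[joint]) for joint in a})
    return out
```

In this sketch, a human knee angle of 3.0 rad exceeds the hypothetical 2.3 rad limit and is clipped, so the robot never receives a pose it cannot physically reach.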